EPICS Collaboration Meeting

Pickavance Lecture Theatre/Visitor Center

ISIS Neutron and Muon Source

The Experimental Physics and Industrial Control System, EPICS, is a set of open-source software tools, libraries and applications developed collaboratively and used worldwide to create distributed control systems for both scientific instruments and industrial applications such as particle accelerators, telescopes, experiment beam lines and other large scientific experiments.

The EPICS Collaboration Meetings provide an opportunity for developers and managers from the various EPICS sites to come together and discuss their work and inform future plans. Attendees can see what is being done at other laboratories, and review plans for new tools or enhancements to existing tools to maximize their effectiveness to the whole community and avoid duplication of effort.

The Spring 2025 EPICS Collaboration Meeting and related workshops and training events will take place between Monday 7 April and Friday 11 April 2025 at the Rutherford Appleton Laboratory. The ISIS Neutron and Muon Source is leading the organisation of this event with cooperation from the Diamond Light Source and Central Laser Facility.

We are providing details to support visa applications, and will advise on travel, accommodation, and dining arrangements.

We look forward to seeing you in Oxfordshire in the spring.

-

-

08:30

→

10:00

Registration 1h 30m R112 Visitor Centre

R112 Visitor Centre

Registration will take place in the R112 Visitor Centre.

Non-STFC staff will need to make themselves known at R75 Main Reception before proceeding on-site. Arrangements will be made for those arriving from a conference hotel by one of the provided buses.

-

10:00

→

11:00

Welcome 1h Pickavance Lecture Theatre/Visitor Center

Pickavance Lecture Theatre/Visitor Center

ISIS Neutron and Muon Source

Rutherford Appleton Laboratory Harwell Campus, Didcot Oxfordshire, OX11 0QX. UK

Keynote speech by Roger Eccleston, STFC Executive Director, National Laboratories: Large Scale Facilities and Head of Rutherford Appleton Laboratory.

Safety guidance, guide to facilities, meeting rooms, and other arrangements.

-

11:00

→

17:00

EPICS Plenary Session Pickavance Lecture Theatre/Visitor Center

-

11:00

Status Update on transition to EPICS at the ISIS Source 20m

We report on the progress in transitioning the ISIS source from our current control system to a PVAccess-based implementation of EPICS. On the frontend, efforts continue to improve automatically converted HMIs and to make better use of Phoebus services such as Save-and-Restore. We also report on our progress in deploying and using our first conventional EPICS IOCs, and on the problems encountered in this area.

Speaker: Ivan Finch (STFC) -

11:20

EPICS Diode – One-Way Data for Secure Remote Participation 20m

To support ITER’s remote participation plans while honoring cybersecurity requirements, we are developing the “EPICS Diode”, mirroring EPICS PVs through hardware devices allowing strictly one-directional network traffic.

We present the concept, implementation and status, showing the first results of scalability and performance measurements, possible enhancements and the next planned steps.

Speaker: Ralph Lange (ITER Organization) -

11:40

How to teach an old Tokamak new tricks 20m

New requests and requirements for ASDEX Upgrade (AUG) diagnostics are bringing new life to the ASDEX Upgrade Tokamak. The AUG team is fighting daily with the problems of any long-lived scientific facility. The ASDEX Upgrade infrastructure, referring to both software and hardware, has been mostly created in-house. It is no longer feasible to just extend and maintain the old legacy systems. However, AUG still maintains a regular schedule of operation, and is expected to run for at least 10 more years, with typically three months of downtime between campaigns. To tackle this challenge, the AUG team is looking at the ITER model as a robust reference for long-term maintenance. To continue serving AUG operations, small upgrades are being deployed in parallel to legacy services to prove the technology and ensure a smooth transition. Following an ITER-like model EPICS is being introduced with a twist: rather than deploying full EPICS at once, small container services are deployed for diagnostics. EPICS device support is developed using ITER Nominal Device Support (NDS v3), connecting it directly to the ASDEX Upgrade Discharge Control System (DCS). At the same time, new EPICS-supported d-tacq devices are being deployed to bridge the gap before jumping to MTCA hardware. Overall, this stepwise approach is already routinely delivering scientific data during AUG operation and provides a comprehensive roadmap to completely renewed AUG diagnostics. In this talk, we share this approach and our current experiences as an established yet evolving facility deploying EPICS and MTCA for the first time.

Speaker: Miguel Astrain Etxezarreta (Max Planck Institute for Plasma Physics (IPP)) -

12:00

Testing for SARAF Project: EPICS CI/CD Pipeline Insights 20m

In this presentation, I will explore the testing strategies and challenges encountered during the SARAF accelerator Phase II project, developed by CEA-Irfu for SNRC (Soreq NRC) in Israel. The SARAF control system, based on the EPICS framework, required robust, scalable, and automated testing to ensure smooth integration and reliable performance.

The talk highlights various facets of the testing framework, including EPICS tests with WeTest, critical system tests, and continuous integration pipelines using GitLab CI/CD. I will explain the importance of delivery tests, test execution in production, and the automation of key tasks such as syntax checking, log parsing, and GUI validation. Additionally, insights into optimizing CI pipelines, managing large archives, and lessons learned from real-world deployments will be shared.

Speaker: Victor Nadot (CEA) -

12:30

Lunch 1h

-

13:30

Extreme Photonics Application Centre (EPAC) status report 20m

EPAC will be driven by a 10 Hz petawatt laser using novel technology developed at the Central Laser Facility. By changing parameters such as target material and geometry, applications can switch between generating high-energy x-rays and beams of high-energy electrons, protons, ions, neutrons and muons, to enable multi-modal imaging and probing capabilities.

This talk will give a brief overview of the facility, and update on the design and commissioning of the EPICS control system.

Speaker: Chris Gregory (STFC) -

13:50

Data Acquisition and Management for Machine Physics and Experimental Beamlines with EPICS 20m

Data acquisition architectures are full-stack problems that impact instrumentation, FPGA-based devices, timing distribution, drivers to integrate instrumentation, EPICS for online configuration and monitoring, fast storage of data, and export and management of data. This report touches on the architecture and performance of systems that have been deployed and a data management system that is under development.

Speaker: Leo Dalesio (Osprey Distributed Control Systems) -

14:10

New Features in ADTimePix3 Controls for Neutron Detection 20m

The TimePix3 detector, developed by the Medipix collaboration, has emerged as a powerful tool for neutron detection applications at Department of Energy (DOE) National User Facilities, including the Spallation Neutron Source (SNS) and High Flux Isotope Reactor (HFIR). This presentation introduces new features and improvements in the EPICS area detector driver (ADTimePix3), specifically designed for neutron detection experiments.

Recent developments focus on optimizing the driver for weak neutron signals through several key innovations. A dual raw .tpx3 channel system has been implemented, with one channel dedicated to real-time neutron processing that maintains compatibility with existing neutron detector infrastructure. The preview channel now offers enhanced functionality, including summation and averaging capabilities for low count rate experiments, while ongoing development includes direct computation of Time-of-Flight (ToF) histograms and advanced hardware triggering acquisition modes.

To address data rate challenges, we have developed an Advanced Mask Generation and Control system. This innovative feature provides an areaDetector image mask system supporting arbitrary shapes through circular and rectangular elements for both single-chip and quad-chip detector configurations. The system includes Binary Pixel Configuration vector generation with automatic file generation and upload from EPICS waveforms, enabling flexible and efficient data collection.

Radiation effects mitigation has emerged as a critical need in neutron experiments, as high-energy radiation can cause various detector artifacts such as temporarily activated "hot" pixels, non-counting double columns, and elevated count rates in specific chips. We have developed correction and mitigation tools to address these radiation-induced effects, which are particularly important in high-radiation neutron experiments.

This work represents a significant step forward in the integration of advanced detector technologies into the EPICS control system framework. Future work will focus on further refinement of calibration and equalization methods to optimize detector performance in DOE's Scientific User Facilities.

This research used resources at the High Flux Isotope Reactor and the Spallation Neutron Source, DOE Office of Science User Facilities operated by the Oak Ridge National Laboratory. This work was supported by the U.S. Department of Energy, Office of Science, Scientific User Facilities Division under Contract No. DE-AC05-00OR22725.

Keywords: EPICS, areaDetector, neutron, X-ray

Acknowledgments: Fumiaki Funama, Greg Guyotte, Bogdan Vacaliuc, Zach Thurman, James Kohl, Vladislav Sedov.

References:

[1] https://github.com/areaDetector

[2] https://github.com/areaDetector/ADTimePix3

Speaker: Kazimierz Gofron (Oak Ridge National Laboratory) -

14:30

The EPAC camera system 20m

Laser facilities such as EPAC use cameras as the primary diagnostic for alignment and system monitoring. In a large facility there can be over a hundred of these cameras streaming images from different areas to a central control room, and operators may have an interest in observing tens of image sources simultaneously. This talk will give an overview of the configuration used in EPAC to attempt to achieve this, performance results so far using both Channel Access and pvAccess, and raise some of the challenges that we still have.

Speaker: Chris Gregory (STFC) -

14:50

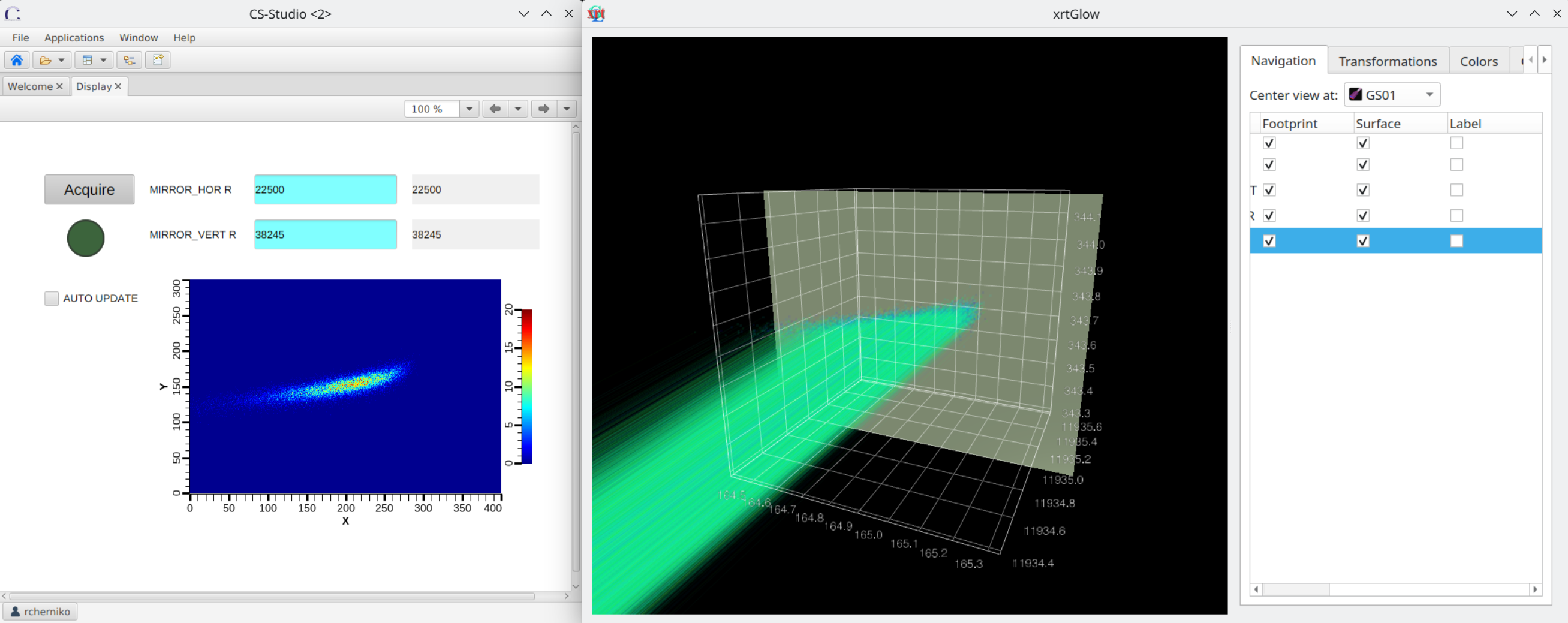

Bridging Simulation and Reality: EPICS controls for xrt Beamline Digital Twins 5m

Synchrotron beamline simulation codes have long been essential for designing new beamlines and troubleshooting existing ones. However, there remains a noticeable gap between the idealized simulation results and the performance of real beamlines. The xrt X-ray tracing package addresses this challenge by employing a global coordinate system for positioning and orienting optical elements, along with embedded support for material properties, thereby introducing the flexibility needed to realistically simulate beamline imperfections.

To bring the simulation even closer to the real-world experience, we have employed the pythonSoftIOC library to integrate the support for EPICS controls across the entire beamline model — from the source to the detector. This integration enables end users to leverage familiar tools like CSS and Phoebus to monitor and control the beamline digital twin in near real-time, thanks to the efficiency of the xrt code. Furthermore, when combined with higher-level tools like Bluesky and Blop, beamline scientists can evaluate optimization tasks with high fidelity. Users can determine realistic beam dimensions, shapes, and intensities at various positions and estimate the data requirements for training optimization agents before applying these insights to the real beamline.

The entire process can be monitored live and in detail using the 3D beamline visualization tool, xrtGlow, providing a comprehensive and interactive environment that bridges simulation and reality.

Speaker: Dr Roman Chernikov (Brookhaven National Laboratory) -

15:00

Coffee and Tea 45m

-

15:45

Leveraging EPICS for Control Software in the Electron-Ion Collider 20m

The Electron-Ion Collider (EIC) at Brookhaven National Laboratory plans to adopt EPICS as its control software, transitioning from the Accelerator Device Object (ADO)-based control system used by the Relativistic Heavy Ion Collider (RHIC). On the hardware side, the EIC intends to migrate its front-end electronics to Zynq-based general I/O boards. As a result, our ongoing efforts focus on developing a robust EPICS development and deployment environment capable of supporting a wide range of subsystems, integrating the general I/O boards, and ensuring interoperability between the EPICS-based control system and the legacy ADO-based system. This presentation will highlight the progress, challenges, and current status of these initiatives.

Speaker: Md Latiful Kabir (Brookhaven National Laboratory) -

16:05

EPICS Council Report 20m

This talk will cover recent activities of the EPICS Council on behalf of the EPICS Collaboration. Discussions from previous meetings have brought forward new ideas to improve communication in the community and to plan more effectively for sustainability. Topics will be described, and time will be allowed for discussion.

Speaker: Karen White (Oak Ridge National Laboratory) -

16:25

OPC UA Device Support – Update 10m

A collaboration (ITER/HZB-BESSY/ESS/PSI) maintains and develops a Device Support module for integration using the OPC UA industrial SCADA protocol. Goals, status and roadmap will be presented.

Speakers: Dr Dirk Zimoch (Paul Scherrer Institut), Ralph Lange (ITER Organization) -

16:35

Using compress records in the real world 5m

The compress record is a simple but powerful tool for data processing in an EPICS system. This record can provide, in the IOC, post-processing of data that would otherwise require high-level tools. Although it has been a part of EPICS for a long, long time, it is perhaps not as widely understood or used as it might be. Doing this post-processing at the IOC rather than the application level allows the use of standard EPICS features such as alarms, archiving, trend plots, save-restore, etc. A real-world example of how this record can be used to measure integrated radiation dose over a number of time ranges will be shown.
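As a rough illustration of the kind of processing described in this abstract, the following Python sketch models two stages loosely patterned on the compress record's "N to 1 Average" and "Circular Buffer" algorithms. It is not the EPICS implementation and not the speaker's configuration; the sample values, units, and window sizes are assumptions chosen only for the example.

```python
from collections import deque


def n_to_1_average(samples, n):
    """Collapse each complete group of n samples into their mean value."""
    return [sum(samples[i:i + n]) / n for i in range(0, len(samples) - n + 1, n)]


class CircularBuffer:
    """Keep only the most recent `size` values (oldest values drop out)."""

    def __init__(self, size):
        self.values = deque(maxlen=size)

    def push(self, value):
        self.values.append(value)

    def total(self):
        return sum(self.values)


# Hypothetical dose-rate readings in uSv/h, sampled once per minute for two hours.
per_minute_rate = [2.0] * 120

# Stage 1: 60 one-minute samples -> one hourly mean rate (uSv/h),
# which is numerically the dose accumulated in that hour (uSv).
hourly_dose = n_to_1_average(per_minute_rate, 60)

# Stage 2: rolling window over the most recent 24 hourly values.
last_day = CircularBuffer(24)
for dose in hourly_dose:
    last_day.push(dose)

print(hourly_dose)
print(last_day.total())
```

Chaining stages with different window sizes is one way such a design could yield integrated dose over several time ranges from a single input signal.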

Speaker: Steven Hunt (Lawrence Berkeley Laboratory) -

16:40

Phoebus in the Dark 5m

Our customer required dark-themed graphical user interfaces for their control system. To ensure a consistent look, the default colours of Control System Studio Phoebus had to be modified. While many UI elements in Phoebus could be adjusted through CSS, significant modifications were required in the source code to achieve the desired look. Key updates included defining a unified colour scheme, modifying CSS files and source code as well as updating icon colours. To ensure consistency in GUI development, we collaborated with our internal UX designer to establish design guidelines for developers. Besides the colour scheme, the guidelines define fonts, the look of widgets and how to position them on the screen. In this talk I will share our experience in implementing a cohesive dark theme, outlining the challenges and solutions encountered along the way.

Speaker: Urban Bobek

-

-

08:30

→

10:00

-

-

09:00

→

17:00

EPICS Plenary Session Pickavance Lecture Theatre/Visitor Center

-

09:00

Control System Upgrades at SNS 20m Pickavance Lecture Theatre/Visitor Center

The EPICS based control system at SNS has been in operation since 2006. Numerous upgrades have been added over time to enhance capability and performance. Almost 20 years on, we have many obsolete hardware components which require upgrades along with corresponding software upgrades. Cyber security requirements have also changed in recent years which implies changes to the control system network and other infrastructure. This talk will describe our efforts to maintain high availability operations while also modernizing our hardware, software and corresponding infrastructure.

Speaker: Karen White (Oak Ridge National Laboratory) -

09:20

Data acquisition and processing for Zynq UltraScale+ based AMCs using high-level synthesis languages 20m Pickavance Lecture Theatre/Visitor Center

This contribution presents a data acquisition and processing system implemented for a MicroTCA Advanced Mezzanine Card (AMC) that is based on an AMD Zynq UltraScale+ MPSoC. The presentation focuses on the methodology to develop custom applications on such a system.

The hardware implemented into the FPGA includes the JESD204B high-speed ADC/DAC interface, as well as PCIe connectivity for data streaming to/from external systems. Furthermore, it supports high-level synthesis languages like OpenCL and HLS, reducing the development time of data acquisition and processing algorithms compared to traditional hardware description languages.

In addition, an embedded Linux is deployed using Yocto-based tools to run on the Zynq ARM cores. This enables efficient heterogeneous processing, where control and management tasks run on the processor while computationally intensive operations are offloaded to the FPGA.

The presentation focuses on the application development methodology for the system, highlighting how standard drivers and APIs simplify its development and maintenance.

Finally, a use case is presented in which the system is used to implement and verify a digital pulse shape analysis algorithm for signals acquired at 1GSamples/s.

Speaker: Alejandro Piñas (UPM) -

09:40

PLC PARSER TOOL FOR EPICS DATABASE GENERATION 20m Pickavance Lecture Theatre/Visitor Center

The IRFU software control team (LDISC [2]) is involved from feasibility studies to equipment deployment across various experiments that differ in size and operational duration. For many years, LDISC has been using Programmable Logic Controller (PLC) solutions to control portions of these experiments. In the context of automation programming, the organization of memory zones is crucial for efficient control and data management. When programming a PLC, our programmers must define the memory areas for inputs, outputs, data storage, and intermediate conditions; they implement logic to manipulate this data in real time. To accomplish this, our automation engineers develop with TIA Portal [3], a software suite provided by SIEMENS.

Additionally, PLC supervision at IRFU is based on widely used protocols such as S7 (S7NoDave [4]), S7PLC [5], Modbus TCP [6], OPC UA [7] and an in-house protocol, S7CEA. As EPICS projects are expanding within our department and because EPICS also supports drivers for those protocols, it was logical to adopt this solution as a supervisory system. However, given the large number of variables being manipulated, and to prevent human errors, it was essential to develop a tool that automatically generates the database. To accelerate development, LDISC has created a PLC Parser Tool that generates an IOC EPICS database for PLC communication.
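To make the idea of automatic database generation concrete, here is a minimal Python sketch that turns a table of PLC variables into EPICS database records. It is not the LDISC tool. The variable table, the type mapping, and the `$(DTYP)` and `@$(PLC)/offset` link strings are hypothetical placeholders; the real device type and address syntax depend on the chosen driver.

```python
# Hypothetical PLC variable table: (name, PLC data type, byte offset, description).
PLC_VARIABLES = [
    ("TankLevel", "Real", 0, "Tank level"),
    ("PumpSpeed", "Int", 4, "Pump speed readback"),
    ("ValveOpen", "Bool", 6, "Valve open status"),
]

# Assumed mapping from PLC data types to EPICS input record types.
RECORD_TYPES = {"Real": "ai", "Int": "longin", "Bool": "bi"}


def make_record(prefix, name, plc_type, offset, desc):
    """Render one EPICS database record as text."""
    rtype = RECORD_TYPES[plc_type]
    lines = [
        f'record({rtype}, "{prefix}:{name}") {{',
        f'    field(DESC, "{desc}")',
        '    field(DTYP, "$(DTYP)")',             # placeholder: driver-specific
        f'    field(INP,  "@$(PLC)/{offset}")',   # placeholder address syntax
        '    field(SCAN, "1 second")',
        "}",
    ]
    return "\n".join(lines)


def generate_db(prefix, variables):
    """Render all variables as one database file body."""
    return "\n\n".join(make_record(prefix, *var) for var in variables) + "\n"


db_text = generate_db("PLC1", PLC_VARIABLES)
print(db_text)
```

Generating records from a single variable table keeps names, offsets, and record types consistent, which is the kind of human error the abstract says the tool is meant to prevent.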

REFERENCES

[1] IRFU, http://irfu.cea.fr

[2] LDISC, Laboratory of Development and Integration of Control System

[3] SIEMENS TIA Portal https://xcelerator.siemens.com/global/en/all-offerings/products/t/tia-portal.html

[4] S7NoDave, EPICS support

[5] S7PLC, EPICS support

[6] Modbus TCP, EPICS support

[7] OPCUA, R. Lange Integrating OPC UA Devices in EPICS [doi:10.18429/JACoW-ICALEPCS2021-MOPV026]

[8] EPICS, https://epics-controls.org

Speaker: Katy Saintin (CEA) -

10:00

EPICS and AsynPortDriver solve rapid data movement challenges 20m Pickavance Lecture Theatre/Visitor Center

Three case studies where EPICS and AsynPortDriver proved to be invaluable.

First, a rapid scope "Judgement"; second, a combined slow-trend and full-rate "Fault Monitor" system; and finally, an IOC implementation for exploring a post-shot stored data set.

Speaker: Peter Milne (D-TACQ Solutions Ltd) -

10:20

Overview of the new Omroneip ethernet/IP EPICS driver 5m Pickavance Lecture Theatre/Visitor Center

Omroneip is a new EPICS asyn driver which is used primarily to communicate with Omron NJ/NX PLCs using the EtherNet/IP communications protocol. This protocol is an adaptation of the CIP protocol over Ethernet. The driver supports the large forward open CIP specification as well as packing of CIP responses. The large forward open message specification uses connected messaging and supports single request/response packet sizes of up to 1994 bytes on Omron NJ/NX.

This driver uses the open source libplctag library, which supports communications with Allen-Bradley PLCs. In theory, the driver can therefore also communicate with Allen-Bradley PLCs, although this has not been well tested.

Speaker: Phil Smith (Observatory Sciences LTD) -

10:30

Coffee and Tea 30m R112 Visitor Centre

R112 Visitor Centre

Rutherford Appleton Laboratory -

11:00

State of Controls at the KIT Accelerator Facilities 20m Pickavance Lecture Theatre/Visitor Center

The two accelerators KARA and FLUTE at the Karlsruhe Institute of Technology have been using EPICS for many years. In anticipation of our upcoming cSTART project, major upgrades are being made to our control system infrastructure on all levels. This includes the introduction of a fully digital camera setup based on areaDetector, migration to Phoebus for the GUI, transition to a different OPC-UA module for PLC communication, and integration of new devices, many with embedded EPICS IOCs. This is paired with a major consolidation effort in terms of server infrastructure, automated pipelines and logging services. Last but not least, we are also working on our Python layer. The main focus of this work is improving and simplifying access to the control system for a diverse group of users, from students to domain experts, and providing tools for a wide range of applications, from accessing accelerator data to the integration of machine-learning solutions. This talk will present the current status and outlook.

Speaker: Edmund Blomley (Karlsruhe Institute of Technology) -

11:20

EPICS in small labs, ‘Quo vadis’ hardware for data acquisition 20m Pickavance Lecture Theatre/Visitor Center

When using EPICS in smaller laboratories, one is confronted with the task of selecting and procuring suitable hardware for data acquisition.

Above all, these are often very individual installations for which large numbers of hardware components of one type are not required. In these laboratories, there is usually no technical support that can carry out FPGA programming and design, etc.

With VMEbus, it was (and in some cases still is) possible to configure customised and practical individual systems. This no longer seems possible with modern systems such as uTCA.

Furthermore, one is confronted with the fact that proprietary laboratory systems such as gas chromatographs, spectrometers, potentiostats, etc. have to be integrated in chemistry.

Speaker: Heinz Junkes (Fritz Haber Institute) -

11:40

FastCS - A framework for building device support in Python for EPICS, Tango and more 20m Pickavance Lecture Theatre/Visitor Center

At Diamond Light Source there are currently many different ways to write device support and as a result the drivers are often bespoke, don’t follow a common convention and are only understood by the engineer that wrote them. We wanted to better standardise and lower the barrier of entry to writing device drivers and to promote greater collaboration across different software groups, such as with the teams that implement the bluesky/ophyd scanning layer. Strict silos of responsibilities for different layers of the software stack lead to roadblocks, unclear APIs and difficulty solving problems that fall between the gaps. By making the driver code more accessible - removing the need for specialist EPICS knowledge - a wider group of developers across software groups and beamlines can understand and contribute to the device drivers. Driver development will become more collaborative and open, with robust software engineering standards applied at the code review stage and ownership by teams rather than individuals.

With this goal in mind, we have developed a simple and flexible framework for creating device drivers in modern, idiomatic python. The driver can be tested independently of a control system and then loaded into an application with one or more transports to expose an external API, such as EPICS, Tango or GraphQL. The EPICS transport builds on the pythonSoftIOC package, hiding some of the EPICS internals and sharp corners in the API behind a simpler interface. FastCS makes it easy to dynamically define the API at runtime by introspecting a device. For example, Dectris Eiger detectors provide a REST API to query what parameters the detector has, including their datatype and limits. This means a FastCS driver for Eiger can query this API during initialisation and create (for example) EPICS PVs for all of the parameters in the API, without having to statically define them in the code - unless they are used for internal logic. Additionally, in the EPICS transport a GUI (Phoebus .bob file) is generated with all of the PVs in the IOC. The combined effect of this is if the firmware is updated on the hardware and adds a new parameter, adding the corresponding PV to the GUI just requires rebooting the IOC.
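The introspection idea can be sketched in plain Python as follows. This does not use the FastCS or pythonSoftIOC APIs; the JSON description, the type-to-record mapping, the PV naming scheme, and the `_RBV` suffix convention are all assumptions made for illustration, standing in for whatever a device's own query interface returns.

```python
import json

# Hypothetical JSON standing in for a device's parameter-introspection response.
DEVICE_DESCRIPTION = json.loads("""
[
  {"name": "count_time", "type": "float", "min": 0.001, "max": 3600.0, "writable": true},
  {"name": "nimages", "type": "int", "min": 1, "max": 100000, "writable": true},
  {"name": "state", "type": "string", "writable": false}
]
""")

# Assumed mapping from device datatypes to EPICS record types.
READ_RECORDS = {"float": "ai", "int": "longin", "string": "stringin"}
WRITE_RECORDS = {"float": "ao", "int": "longout", "string": "stringout"}


def to_pv_name(prefix, param_name):
    """Turn a snake_case parameter name into a prefixed CamelCase PV name."""
    return prefix + ":" + "".join(part.capitalize() for part in param_name.split("_"))


def build_pvs(prefix, description):
    """Create a readback PV per parameter, plus a setpoint PV if it is writable."""
    pvs = {}
    for param in description:
        base = to_pv_name(prefix, param["name"])
        limits = (param.get("min"), param.get("max"))
        pvs[base + "_RBV"] = {"record": READ_RECORDS[param["type"]], "limits": limits}
        if param["writable"]:
            pvs[base] = {"record": WRITE_RECORDS[param["type"]], "limits": limits}
    return pvs


pvs = build_pvs("DET", DEVICE_DESCRIPTION)
for name in sorted(pvs):
    print(name, pvs[name]["record"])
```

Because the PV table is derived from the description at start-up, a parameter added to the description appears as a new PV on the next restart without any change to the code.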

This talk will provide an overview of the FastCS architecture, demonstrate examples of static and dynamic drivers, and walk through the process of creating device support using the framework.

Speaker: Gary Yendell (DLSLtd) -

12:00

Hardware is Hard 20m Pickavance Lecture Theatre/Visitor Center

A programmer learns about many of the difficulties in designing and producing custom electronics. Lessons learned developing the Osprey Quartz digitizer system.

Speaker: Michael Davidsaver (Osprey DCS) -

12:20

EPICS & Phoebus web tools survey 5m Pickavance Lecture Theatre/Visitor Center

There is currently a wide variety of web tools in the EPICS community, and more and more facilities are developing or adopting them. We would like to perform a survey of tools and interested individuals, to improve visibility of these projects and to provide a space for people who are open to collaboration. A short survey will be made available, and part of the Phoebus developers session will be dedicated towards our next steps.

There will also be a short overview of Web UI plans from the Diamond Controls group.

Speaker: Martin Gaughran (Diamond Light Source) -

12:30

Lunch 1h R112 Visitor Centre

R112 Visitor Centre

-

13:30

Introduction to ADPixci 5m Pickavance Lecture Theatre/Visitor Center

I will give a brief presentation discussing our work on an area detector driver for interfacing with detectors through PIXCI frame grabbers.

It currently supports two models of Raptor Photonics X-ray detectors: the Raptor Eagle XV 4240 II and the Raptor Eagle 4710 II. However, it could be expanded to support other detectors that are interfaced with via PIXCI frame grabbers.

We plan to release ADPixci and the associated Raptor Eagle XV IOC as open source software in the coming month, and I will mention how this fits into our wider goal as an organisation to open source our EPICS related software.

Speaker: Irie Railton (Central Laser Facility - STFC) -

13:35

Introducing EPICS Chat 20m Pickavance Lecture Theatre/Visitor Center

Responding to user requests for a discussion and support forum that is more accessible and more intimate than the Tech-Talk mail exploder, we are introducing "EPICS Chat", based on the Matrix network for secure, decentralised communication.

Speaker: Ralph Lange (ITER Organization) -

13:55

web-pvtools - PV client tools for web 20m Pickavance Lecture Theatre/Visitor Center

web-pvtools includes a series of PV client tools that can access IOCs via web browsers. This work is inspired by epics2web and pvws, and it supports both Channel Access and PV Access. It consists of a backend and a frontend: the backend is a customized version of pvws, whereas the frontend is a single page application based on the Vue.js framework that provides PV tools like caget, caput, camonitor, cainfo, probe, StripTool and X/Y Plot. This talk will cover the implementation and usage of web-pvtools.

Speaker: Lin Wang (IHEP/CSNS) -

14:15

Bluesky at the ISIS Neutron and Muon Source 20m Pickavance Lecture Theatre/Visitor Center

The Experiment Controls team at the ISIS Neutron and Muon Source has begun adoption of the bluesky & ophyd-async libraries to implement scanning and alignment workflows on neutron & muon beamlines. Early results are promising, with several beamlines now moving from a testing phase towards production use.

This talk will describe the initial use cases from neutron reflectometers, small-angle neutron scattering instruments, and muon spectrometers, as well as facility and technique-specific implementation challenges.

Speaker: Tom Willemsen (ISIS Neutron & Muon Source) -

14:35

Introducing OAuth2 in Olog/Phoebus Software 20m Pickavance Lecture Theatre/Visitor Center

This talk covers the integration of OAuth2 authentication within the Olog/Phoebus system, a key component of the EPICS infrastructure. The current service architecture involves multiple authentication methods, each implementing its own authentication mechanisms, leading to challenges such as inconsistent authorization flows, credential exposure and maintenance difficulties. The proposed solution leverages OAuth2, an access delegation protocol, to unify authentication across services, enhancing security and ease of access. The presentation outlines the benefits of token-based access control, various OAuth2 authorization flows, and its implementation strategy in Olog/Phoebus. This transition aims to streamline authentication processes while ensuring robust security measures for EPICS software applications.

Speaker: Giovanni Lorenzo Napoleoni (INFN) -

14:55

Python Accelerator Middle Layer 5m Pickavance Lecture Theatre/Visitor Center

The accelerator community makes use of a suite of tools called the 'Matlab Middle Layer', or MML. This provides an accelerator-agnostic interface alongside a large number of high-level applications, such as slow orbit feedback, beam-based alignment, and others. MML was originally developed over 20 years ago, and accelerator physicists and other interested people have now grouped together to develop a replacement system using Python. This is called the Python Accelerator Middle Layer (or PyAML) project.

This talk is a brief overview of the project's goals. We would also like to reach out to any people who are interested in joining the collaboration.

Speaker: Martin Gaughran (Diamond Light Source) -

15:00

Coffee and Tea 30m R112 Visitor Centre

-

15:30

oac-tree - Using behavior trees to automate operations and control 20m Pickavance Lecture Theatre/Visitor Center

ITER's operation requires complex automation sequences that are beyond the scope of the finite state machine concept that the EPICS SNL Compiler/Sequencer implements.

The Operations Applications group at ITER is developing oac-tree (Operation, Automation and Control using Behavior Trees), a new sequencing tool based on behavior trees, which has been successfully used in its first production applications.

This overview talk will present the current status and future plans of the project.

Speaker: Walter Van Herck (ITER Organization) -

15:50

The IBEX web dashboard 20m Pickavance Lecture Theatre/Visitor Center

Scientists, PhD students, commercial users and operational staff at the ISIS Neutron and Muon Source rely on a web dashboard to remotely monitor beamline-specific parameters crucial to operations.

Previously, we used the CS-Studio Eclipse RDB Archive Engine and a bespoke server to parse the information it collected from the instruments, but this solution is difficult to maintain.

We have since written a frontend which leverages Phoebus' PV Web Socket (PVWS) module to subscribe to EPICS PVs on the instruments themselves; updates are then displayed in a React-based framework via a websocket.

This talk will explain the initial research and implementation details of the new dashboard, along with the benefits of using PVWS over scraping the ArchiveEngine.

Speaker: Jack Harper (STFC) -
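As a rough illustration of the PVWS subscription pattern the abstract describes, the sketch below builds the JSON text a client sends to subscribe and merges incoming updates into a per-PV cache. It is not the IBEX dashboard code (which the abstract says is React-based); the function names and the PV name `IN:DEMO:TEMP` are hypothetical, and the message shapes follow our understanding of the PVWS JSON protocol, so check them against the PVWS documentation before relying on them.

```python
import json


def pvws_subscribe_message(pv_names):
    """Return the JSON text a client would send to subscribe to PVs."""
    return json.dumps({"type": "subscribe", "pvs": list(pv_names)})


def apply_update(cache, message_text):
    """Merge one 'update' message into a per-PV cache (hypothetical helper).

    Assumption: later updates may carry only the fields that changed,
    so fields are merged into the cached entry rather than replacing it.
    """
    message = json.loads(message_text)
    if message.get("type") != "update":
        return cache  # ignore anything that is not a PV update
    pv = message["pv"]
    fields = {k: v for k, v in message.items() if k not in ("type", "pv")}
    cache.setdefault(pv, {}).update(fields)
    return cache


if __name__ == "__main__":
    print(pvws_subscribe_message(["IN:DEMO:TEMP"]))
    cache = {}
    apply_update(cache, '{"type": "update", "pv": "IN:DEMO:TEMP", "value": 1.5, "units": "K"}')
    apply_update(cache, '{"type": "update", "pv": "IN:DEMO:TEMP", "value": 2.0}')
    print(cache)
```

In a real client the subscribe text would be sent over an open websocket and `apply_update` called from the message handler.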

16:10

Development of Phoebus Applications in CSNS 20m Pickavance Lecture Theatre/Visitor Center

We introduce the development of Phoebus-based applications at CSNS. We made some modifications to the alarm system and the alarm log table, and created some new plugins for the Data Browser. We also developed a very fast snapshot management solution with separate frontend and backend.

Speaker: Mingtao Li -

16:30

Designing documentation for an EPICS development and deployment framework 20m Pickavance Lecture Theatre/Visitor Center

In the context of EPNix, a development and deployment framework for EPICS IOCs and EPICS-related software, I will share my thoughts about writing documentation:

- how we structure the documentation,

- the thought process before writing,

- the writing style,

- and how we enforce a consistent writing style.

Speaker: Rémi Nicole (CEA IRFU/DIS/LDISC) -

16:50

CONTROL SYSTEM STUDIO FOR MUSCADE 5m Pickavance Lecture Theatre/Visitor Center

The LDISC [2] software control team is involved from feasibility studies to the deployment of equipment, covering low level (hardware, PLC) to high level (GUI supervision). For their experiments, the LDISC team deploys two main frameworks:

• MUSCADE [3], a full Java in-house solution, a micro embedded SCADA (µ SCADA) dedicated to small and compact experiments controlled by PLCs (Programmable Logic Controllers)

• EPICS [4], a distributed control system designed to operate devices such as particle accelerators, large facilities and major telescopes.

The EPICS framework provides tools developed in Java such as the Archiver Appliance (the archiving tool), Phoebus Control-Studio [4] (GUI), Display Web Runtime (web client), and the Phoebus Alarm service. Regarding graphical interfaces, and according to the "GUI Strategies" workshop at ICALEPCS 2023 (Cape Town), CS-Studio, CSS or Phoebus are the most commonly used solutions for EPICS clients. Several institutes, including IRFU, are progressively migrating to Phoebus.

Currently, MUSCADE users require intuitive tools to build their GUIs. The initial developer provided a tool to convert AutoCAD documents into Java Swing applications. In 2018, LDISC developed a conversion tool to generate CSS and Phoebus GUIs from AutoCAD. Additionally, LDISC has developed a Muscade to EPICS bridge to connect the GUI with the Channel Access protocol.

In 2023, the Muscade roadmap defined a new strategy to use a common set of client tools with EPICS, to streamline development efforts. Since then, IRFU has carried out several developments to adapt Phoebus for Muscade. The Phoebus architecture offers a plugin mechanism to integrate new data sources and new historical sources, which makes the software perfectly suited for this purpose.

References

[1] IRFU https://irfu.cea.fr/en/

[2] LDISC https://irfu.cea.fr/dis/en/Phocea/Vie_des_labos/Ast/ast_groupe.php?id_groupe=4616

[3] MUSCADE https://irfu.cea.fr/dis/products/www/muscade/

[4] Phoebus Control-Studio https://control-system-studio.readthedocs.io/

Speaker: Katy Saintin (CEA)

-

09:00

-

09:00

→

17:00

-

-

09:00

→

17:00

EPICS Plenary Session Pickavance Lecture Theatre/Visitor Center

-

09:00

IFMIF-DONES Control Systems: General Architecture 20m Pickavance Lecture Theatre/Visitor Center

IFMIF-DONES (International Fusion Materials Irradiation Facility - DEMO-Oriented NEutron Source) is a cutting-edge neutron irradiation facility under construction as part of the European fusion roadmap. Located in Granada, Spain, its primary objective is to validate and qualify materials to be used in fusion reactors. The construction phase, initiated in March 2023 following the first DONES Steering Committee, is progressing under the framework of the DONES Programme, with initial in-kind contributions, including those related to the Control Systems, currently being formalized.

The IFMIF-DONES Control Systems are structured into two levels: the Central Instrumentation and Control Systems (CICS) and the Local Instrumentation and Control Systems (LICS), interconnected through a complex network infrastructure. The CICS comprises three control systems: Control, Data Access and Communication (CODAC), responsible for implementing normal operation by providing central supervision and control, timing management, data management, alarm and warning handling, system administration, and software; the Machine Protection System (MPS), ensuring machine protection functions against facility failures, CODAC malfunctions, or potential operational errors; and the Safety Control System (SCS), which implements the safety functions to protect personnel and the environment. Several of the technologies envisioned for these systems have been extensively tested in recent years through the LIPAc prototype. This presentation will provide a detailed overview of the IFMIF-DONES Control Systems architecture, focusing on the evaluation process of the most suitable control framework by comparing other solutions with EPICS.

Speaker: Celia Carvajal Almendros (CONSORCIO IFMIF-DONES ESPAÑA) -

09:20

EPICS at IFMIF-DONES: functionality and possible improvements 20m Pickavance Lecture Theatre/Visitor Center

IFMIF-DONES (International Fusion Materials Irradiation Facility - DEMO-Oriented NEutron Source) is an accelerator-based neutron irradiation facility being constructed in Granada, Spain, as part of the European fusion roadmap. Its primary objective is to generate a neutron field with a fusion-like energy spectrum to test materials for their use in fusion reactors. The construction of IFMIF-DONES is supported by in-kind contributions from various countries, with the host country contributing, among others, to the Control System.

The IFMIF-DONES Control System is functionally divided into three systems: the Machine Protection System (MPS), the Safety Control System (SCS) and the Control, Data Access and Communication (CODAC) system. The MPS is designed to protect the facility itself, while the SCS is responsible for ensuring the safety of plant personnel and the environment. This contribution will deal with the CODAC system, which is essential for the normal operation of the plant. The Experimental Physics and Industrial Control System (EPICS) has been selected as the control framework for CODAC.

This contribution will present the various functions of CODAC, ranging from supervisory control to data and software management, as well as the EPICS features used to accomplish them. Additionally, potential areas for improvement in EPICS to enable full CODAC functionality will be highlighted.

Speaker: Dr Manuel J. Gutiérrez (IFMIF-DONES) -

09:40

Ophyd-async: status and roadmap 20m Pickavance Lecture Theatre/Visitor Center

Bluesky facilities have been using ophyd as a comprehensive hardware abstraction layer for step scanning use cases, but legacy constraints have made it difficult to move towards flyscanning. We present an update on the status of ophyd-async and a roadmap of future features.

Speaker: Tom Cobb (DLSLtd) -

10:00

Thoughts on improving programming practices in EPICS 20m Pickavance Lecture Theatre/Visitor Center

For years now, the push to adopt memory-safe languages in lieu of C and C++ has been growing steadily. On the management side, it was spurred by various government-issued orders and directives. Among programmers, it was spurred by the appearance of Rust as a viable contender to replace C++. The "rewrite the world" trend is going strong. However, there are open questions on how to transition an entire ecosystem, especially one such as EPICS where backwards compatibility is a high priority. It seems inevitable that introducing a memory-safe language into EPICS core is going to be a slow process.

Using a modern memory-safe language improves the quality of software because, unlike C/C++, both the language and the library ecosystem force the programmer to adopt practices and a mindset that are quite different from those of traditional C++. I would like to put forward the notion that modern C++ allows the programmer to adopt such a mindset as well, with the advantage of excellent backwards compatibility afforded by C++. To demonstrate that, I present three examples, ranging from a humble scope guard (a small utility for managing resources when interfacing with legacy C code), through a different take on managing inter-thread locking (a demonstration of a change in mindset), to a re-imagining of the user-facing API for the aSub record.

Speaker: Jure Varlec (Cosylab d.d.) -

10:20

Rust anyone ? 5m Pickavance Lecture Theatre/Visitor Center

Rust has recently caught my interest. This talk aims to show that Rust can be used to write useful code: a simplified simulator for a motion controller.

Speaker: Torsten Bögershausen (ESS) -

10:30

Coffee and Tea 30m R112 Visitor Centre

-

11:00

Update on EPICS Deployment at Fermilab 20m Pickavance Lecture Theatre/Visitor Center

Fermilab's home-grown control system is now being supplemented with EPICS controls for the new accelerator, PIP-II. The two control systems will be required to operate side by side for the foreseeable future, with the EPICS controls treated as a green-field system. Here I will describe the deployment of our software infrastructure of "pure" PVXS with multicast, and report on the progress and lessons learned.

Speaker: Pierrick Hanlet (Fermi National Accelerator Laboratory) -

11:20

Recent developments and plans for EPICS 7 20m Pickavance Lecture Theatre/Visitor Center

EPICS 7.0.9 was released in February 2025. This talk will cover what’s new and what changed in that release, some things to expect in future releases, and when the Core Developers propose to drop support for VxWorks, RTEMS-4 and some of the older MS Windows compilers.

This work is supported in part by the U.S. Department of Energy, Office of Science, Office of Basic Energy Sciences, under Contract No. DE-AC02-06CH11357.

Speaker: Andrew Johnson (Argonne National Laboratory) -

11:40

EPICS Cybersecurity Update 20m Pickavance Lecture Theatre/Visitor Center

Secure PVAccess (SPVA) enhances the existing PVAccess protocol by integrating Transport Layer Security (TLS) with comprehensive Certificate Management, enabling encrypted communication channels and authenticated connections between EPICS clients and servers (EPICS agents).

This project, funded by the Department of Energy and awarded to SLAC National Accelerator Laboratory, has been running for over a year. We are in the process of testing it at a number of laboratories (SLAC, ISIS, FNAL, ORNL) and integrating it with their authentication systems, including Kerberos and LDAP, to provide a robust integration with EPICS Security (ACF) for PV access control.

Here's an update of the state of the project, software availability, and a call for adopters especially LDAP and OAuth. Come to the workshops for a deeper dive:

- Thurs. 11:15 - 12:45: CyberSecurity Developers workshop

- Fri. 09:30 -12:45: CyberSecurity Administrators

Speaker: George McIntyre (Level N Ltd) -

12:00

Integrated control of a chip scanner for time-resolved crystallography at the NSLS-II FMX beamline 5m Pickavance Lecture Theatre/Visitor Center

The FMX (Frontier Microfocusing Macromolecular Crystallography) beamline at the NSLS-II light source has developed a new experimental station for fixed-target time-resolved serial crystallography on biological systems. We present here the control system for a chip scanner which enables the rapid collection of large numbers of room-temperature crystallographic measurements on biological samples. In addition to static measurements, samples can be excited in a pump-probe scheme by the injection of compounds suspended in liquid through a microdrop dispensing system, at timed intervals preceding the measurement. Enabling this has required the implementation of a full-stack integrated solution, involving direct programming of the powerPMAC motion controller, control of motion, triggering and detectors through EPICS, data collection through Ophyd/Bluesky, and the implementation of an optional GUI for control of the experiment. Here, I will outline the components involved in this process, as well as the successes and pitfalls which we have encountered during the implementation and testing of the scanner.

Speaker: Robert Schaffer (Brookhaven National Laboratory) -

12:05

PVXS Update 5m Pickavance Lecture Theatre/Visitor Center

Recent changes to the PVXS module.

Speaker: Michael Davidsaver (Osprey DCS) -

12:10

Phoebus Archiver Datasource 5m Pickavance Lecture Theatre/Visitor Center

The EPICS Archiver Appliance is a vital part of the EPICS technology stack, storing PV data that is essential for data analysis and diagnostics. We have developed a new Phoebus datasource that allows archived data to be accessed as if it were a live PV. This provides a powerful tool for debugging client behavior and developing applications that interact with simulated control system elements, guided by real-world archived data.

Speaker: Kunal Shroff -

12:15

TRISHUL Facility Update 5m Pickavance Lecture Theatre/Visitor Center

This talk provides an update on the upcoming TRISHUL facility at TIFR Hyderabad, which will use a high-intensity, ultra-short petawatt laser for research activities. The key plan is to integrate an EPICS-based control system to enable seamless operations.

Speaker: Ms Sathvika Gambheerrao (Tata Institute of Fundamental Research) -

12:30

Lunch 1h R112 Visitor Centre

-

13:30

Introduction to the software and hardware platforms for the Pre-Project of the ICONE Accelerator 20m Pickavance Lecture Theatre/Visitor Center

ICONE is a pre-project that aims to develop an innovative compact neutron source using HiCANS technology (High-Current Accelerator-driven Neutron Sources). The CEA IRFU, thanks to its extensive experience with various accelerators such as SPIRAL2, ESS, IPHI, and SARAF, is responsible for the design of the Linac. This presentation will introduce our updated software and hardware platforms that are currently in use or in test and planned for implementation on ICONE.

On the software side, we will use EPNix, an EPICS environment based on Nix. This platform will facilitate the installation of all the Phoebus software packages (alarm, save-and-restore, etc.) and the deployment of EPICS IOCs. On the hardware front, we will use the MTCA.4 platform, which includes NAT crates, IOxOS, and D-TACQ acquisition cards for fast data acquisition. The timing system will be managed by MRF cards. Siemens PLCs will handle critical safety processes such as vacuum control, interlocks, and cooling systems. Additionally, Beckhoff systems will be used for non-critical slow acquisitions and motorization tasks.

Speaker: Alexis Gaget (CEA/IRFU) -

13:50

Development of a customised wrapper for p4p 20m Pickavance Lecture Theatre/Visitor Center

The controls system for the ISIS accelerator is being migrated from the commercial software Vsystem to EPICS. The primary protocol used for transporting process variables (PVs) across the network is pvAccess, and the Python-based software p4p is used to create PVA servers that provide access to process variables. A custom wrapper for p4p is being implemented to simplify and standardise the way in which PVA servers work. This will allow users to easily create PVA servers for their own devices whilst allowing automatic registration with other services, for example ChannelFinder.

Speaker: Ajit Kurup (Imperial College) -

14:10

ESS' Controls Ecosystem 20m Pickavance Lecture Theatre/Visitor Center

The European Spallation Source (ESS) is currently undergoing accelerator commissioning and already has more than 3,000 IOCs registered, producing over 8 million process variables (PVs). To manage this scale in a sustainable and maintainable way, we have been developing the Controls Ecosystem (CE): a management system designed to streamline the lifecycle of control system components. CE is a Java and React-based system that leverages Git and Ansible to enable reproducible and high-throughput deployment of hundreds of IOCs per day. Beyond deployment, CE provides integrated support for versioning, traceability, and auditability, which are key features for a facility of this scale. It interfaces with other core systems at ESS, such as ChannelFinder and Prometheus, forming a foundation for reliable, automated, and scalable control system operations. Particular attention has also been paid to the user experience, with a modern interface designed to be as intuitive as possible, while still allowing power users to work unrestricted via the REST API. This presentation will introduce the UI, architecture, and current capabilities of CE.

Speaker: Anders Lindh Olsson (ESS) -

14:30

Controlling ESS Timing System using EPICS Normative Types 20m Pickavance Lecture Theatre/Visitor Center

The European Spallation Source Timing System is based on MRF hardware and the mrfioc2 EPICS module and driver. In order to provide a flexible, EPICS-based way to control our Timing System, a software interface based on Normative Types was developed. This new interface was built using the PVXS C++ library, and the EPICS Normative Type used was the NTTable. The Timing IOC, CSStudio/Phoebus and associated middleware services are some of the software currently supporting NTTables as part of this solution.

In this talk we will share all the steps, challenges and related implementations we had to perform in order to provide a complete software suite which allows controlling the ESS Timing System in a very flexible way, relying on NTTables.

Speaker: Gabriel de Souza Fedel (ESS) -

14:50

Implementing a P4P-Based Serial Driver for Moxa TCP Communication with ISIS Serial Devices 5m Pickavance Lecture Theatre/Visitor Center

The ISIS Controls System transition to EPICS intersects with legacy hardware upgrades. This work presents the development of a robust EPICS-based (p4p) serial driver for interfacing with serial devices, mainly power supplies, via Moxa TCP terminal servers. The driver supports multiple serial device types, handling reads, writes, and instructions with redundant data restoration and alarm management. Key features include thread-safe PV monitoring, real-time serial communication, and automated recovery mechanisms. This implementation enhances the reliability and scalability of Controls hardware, offering a flexible solution for integrating diverse serial-based systems into the ISIS EPICS Control System.

Speaker: Nadir Bouhelali (STFC - ISIS) -

15:00

Coffee and Tea 30m R112 Visitor Centre

-

15:30

LabIOC - Channel Access server and client in pure LabVIEW 5m Pickavance Lecture Theatre/Visitor Center

We introduce a LabVIEW library implementing a Channel Access client and server. The server can host basic EPICS records and works as an EPICS IOC with device support for LabVIEW. The library has no dependencies outside LabVIEW and can be easily deployed to any National Instruments target.

Speaker: Karel Majer (ELI ERIC) -

15:35

A PVAccess library for LabVIEW 20m Pickavance Lecture Theatre/Visitor Center

The ISIS Accelerator Diagnostics group uses National Instruments PXI and cRIO hardware, programmed with LabVIEW, for our data acquisition systems. As we are transitioning to EPICS as our control system, a need has arisen for a direct LabVIEW to PVAccess interface. This talk will cover my progress from requirements to a working prototype that integrates the PVXS C++ library into the LabVIEW ecosystem. A beta release will soon be made to gather feedback on usability, performance and compatibility from other EPICS and LabVIEW users who will have differing use cases and hardware.

Speaker: Ross Titmarsh (STFC) -

15:55

RecSync-rs: A Rust/Python implementation of RecCaster 5m Pickavance Lecture Theatre/Visitor Center

With the increasing use of p4p IOCs at the ISIS Neutron and Muon Source, there is a need for RecCaster Python integration to allow, for example, use of ChannelFinder. The current implementation of the EPICS RecCaster tool is written in C++/C and is dependent on the EPICS base/modules libraries. Recsync-rs, a RecCaster library in Rust, and PyRecCaster Python bindings with cross-platform support are presented.

Speaker: Aqeel Alshafei (STFC) -

16:00

pvAccess and Virtualisation 20m Pickavance Lecture Theatre/Visitor Center

Notes on using the pvAccess protocol in a virtualised environment, with an emphasis on our use with Docker Swarm. pvAccess's use of UDP broadcast for search and beacon messages presents problems in a virtualised environment. We outline our solutions utilising the EPICS_PVA_NAME_SERVERS environment variable, PVA Gateways, and UDP broadcast relays.

Speaker: Ivan Finch (STFC) -
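To make the name-server approach mentioned in the abstract concrete, here is a minimal sketch of preparing a client environment that points at PVA name servers over TCP instead of relying on UDP broadcast search. The helper name and the host names are hypothetical; `EPICS_PVA_NAME_SERVERS` comes from the abstract, and pairing it with `EPICS_PVA_AUTO_ADDR_LIST=NO` is an assumption about one reasonable setup, not a description of the speaker's configuration.

```python
import os


def pva_client_env(name_servers, base_env=None):
    """Return a copy of the environment configured to use PVA name servers.

    name_servers: iterable of "host:port" strings (hypothetical addresses).
    """
    env = dict(os.environ if base_env is None else base_env)
    # Space-separated list of name servers reached over TCP.
    env["EPICS_PVA_NAME_SERVERS"] = " ".join(name_servers)
    # Assumption: do not auto-populate broadcast addresses, since UDP
    # broadcast is what fails to cross the virtualised network.
    env["EPICS_PVA_AUTO_ADDR_LIST"] = "NO"
    return env


if __name__ == "__main__":
    env = pva_client_env(["pva-gateway-1:5075", "pva-gateway-2:5075"], base_env={})
    for key in sorted(env):
        print(f"{key}={env[key]}")
```

The returned mapping could be passed as `env=` to `subprocess.run` when launching a PVA client inside a container.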

16:20

Channel Finder Metrics 20m Pickavance Lecture Theatre/Visitor Center

This talk shows how Channel Finder can now be configured to calculate metrics: the number of channels per property value, or per tag.

Speaker: Sky Brewer (ESS) -
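To illustrate what such metrics count, the sketch below tallies channels per tag and per value of a given property on the client side. This is not the Channel Finder service configuration presented in the talk; the function names and sample data are hypothetical, and the input is assumed to follow the usual Channel Finder JSON shape (each channel has `tags` and `properties` lists).

```python
from collections import Counter


def channels_per_tag(channels):
    """Count how many channels carry each tag name."""
    counts = Counter()
    for channel in channels:
        for tag in channel.get("tags", []):
            counts[tag["name"]] += 1
    return counts


def channels_per_property_value(channels, property_name):
    """Count channels for each value of one named property."""
    counts = Counter()
    for channel in channels:
        for prop in channel.get("properties", []):
            if prop["name"] == property_name:
                counts[prop["value"]] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample data in the assumed JSON shape.
    sample = [
        {"name": "PV:A", "tags": [{"name": "archived"}],
         "properties": [{"name": "iocName", "value": "ioc1"}]},
        {"name": "PV:B", "tags": [],
         "properties": [{"name": "iocName", "value": "ioc1"}]},
        {"name": "PV:C", "tags": [{"name": "archived"}],
         "properties": [{"name": "iocName", "value": "ioc2"}]},
    ]
    print(dict(channels_per_tag(sample)))
    print(dict(channels_per_property_value(sample, "iocName")))
```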

16:40

EPICS containers @INFN—LNF: EPIK8s 5m Pickavance Lecture Theatre/Visitor Center

This presentation describes the current status of migrating INFN-LNF custom control systems to EPICS using EPIK8s, a containerization and orchestration framework that seamlessly integrates EPICS applications into Kubernetes environments. EPIK8s, an implementation of the DLS epics-containers framework developed at INFN-LNF, leverages modern container technologies to overcome the challenges of traditional deployment methods in scientific control systems. Despite limited manpower and specialized EPICS expertise, we have successfully deployed EPICS along with supporting services on INFN-LNF beamlines, including SPARC, BTF, and a remote ELI beamline.

This achievement demonstrates that combining containerization, orchestration, and CI/CD pipelines dramatically improves the deployment process for EPICS controls in new beamlines. Key benefits include maximized development speed through containerized environments that facilitate sharing and reuse, the ability to package IOC software in lightweight virtual environments, and the flexibility to run and test applications anywhere—from local laptops to remote facilities. Additionally, leveraging Kubernetes together with ArgoCD enables centralized orchestration and management of all IOCs and services, allowing control system teams to focus on application development and control services rather than on IT infrastructure challenges.

Speaker: Dr Andrea Michelotti (INFN) -

16:45

Rapid Device Support Development Using Hardware Abstraction Server and P4P 5m Pickavance Lecture Theatre/Visitor Center

Pickavance Lecture Theatre/Visitor Center

ISIS Neutron and Muon Source

Rutherford Appleton Laboratory Harwell Campus, Didcot Oxfordshire, OX11 0QX. UKDevelopment of device support for EPICS software infrastructure is challenging process, it requires in-depth knowledge of EPICS device drivers, database records, IOC toolchain and EPICS GUI. Due to complexity of the EPICS PVA protocol, implementation of the IOC on embedded hardware is practically impossible, especially for multi-client applications. To simplify this task a simple hardware abstraction server (HABS) have been developed. The HABS is small set of C/C++ functions which allows to convert user code to a point-to-point server. The server represent key device parameters as process variables. It serves get(), set() and info() requests and streams continuous measurements to a client. The data format of client-server communication is CBOR (a binary JSON model). Multiple requests can be executed during single transaction. The HABS' client is p4p-based softIocPVA, it generates EPICS IOC automatically, obtaining all necessary information from the info() request. The server and softIocPVA can run on the same host, in that case the standard Linux IPC client-server protocol is used; or the server could be embedded inside the device firmware, communicating with softIocPVA using RS232 or TCP-IP.

This approach has been implemented in a testbench for ALS-U magnet power supplies and for a multi-channel analog/digital signal processing unit based on the STM32G431 microcontroller.

Speaker: Andrei Sukhanov (Brookhaven National Laboratory)

-

09:00

-

09:00

→

17:00

-

-

09:00

→

15:00

Frontend: BlueSky Hamilton Room (R112 Visitor Centre)

Hamilton Room

R112 Visitor Centre

User facing applications

Convener: Mr Marcel Bajdel (HZB)

-

09:00

BlueSky 1h 30m

Speaker: Marcel Bajdel (HZB)

-

11:00

BlueSky 1h 30m

Speaker: Marcel Bajdel (HZB)

-

13:30

BlueSky 1h 30m

Speaker: Marcel Bajdel (HZB)

-

09:00

-

09:00

→

10:30

Hardware and Hardware Interfaces: EPICS 7 CR12 + CR13 (R68)

CR12 + CR13

R68

Hardware and hardware interfaces relevant to EPICS. Includes work on IOCs.

Convener: Ralph Lange (ITER Organization)

-

09:00

→

12:30

Hardware and Hardware Interfaces: Timing 1 CR03 (R61)

CR03

R61

Hardware and hardware interfaces relevant to EPICS. Includes work on IOCs.

Convener: Pilar Gil

-

09:00

Timing 1h 30m

Speaker: Pilar Gil

-

11:00

Timing 1h 30m

Speaker: Pilar Gil

-

09:00

-

10:30

→

11:00

Coffee and Tea 30m R112 Visitor Centre

R112 Visitor Centre

-

11:00

→

15:00

Backend or Services: Containers CR12 + CR13 (R68)

CR12 + CR13

R68

Convener: Giles Knap (DLSLtd)

-

11:00

Containers 1h 30m

Speaker: Giles Knap (DLSLtd)

-

13:30

Containers 1h 30m

Speaker: Giles Knap (DLSLtd)

-

11:00

-

11:00

→

12:30

Backend or Services: CyberSecurity Developers Main Lobby (R112 Visitor Centre)

Main Lobby

R112 Visitor Centre

Convener: George McIntyre (Level N Ltd)

-

11:00

CyberSecurity Developers 1h 30m

Speaker: George McIntyre (Level N Ltd)

-

11:00

-

12:30

→

13:30

Lunch 1h Diamond Atrium (R83 Diamond Atrium)

Diamond Atrium

R83 Diamond Atrium

Lunch provided at the R83 Diamond Atrium

-

13:30

→

17:00

Backend or Services: Phoebus Middleware Main Lobby (R112 Visitor Centre)

Main Lobby

R112 Visitor Centre

Conveners: Mr Georg Weiss (ESS), Sky Brewer (ESS), Kunal Shroff

-

13:30

Phoebus Middleware 1h 30m Main Lobby

Main Lobby

R112 Visitor Centre

Speakers: Georg Weiss (ESS), Sky Brewer (ESS), Kunal Shroff

-

15:30

Phoebus Middleware 1h 30m Main Lobby

Main Lobby

R112 Visitor Centre

Speakers: Georg Weiss (ESS), Sky Brewer (ESS), Kunal Shroff

-

13:30

-

13:30

→

17:00

Hardware and Hardware Interfaces: µTCA CR03 (R61)

CR03

R61

Hardware and hardware interfaces relevant to EPICS. Includes work on IOCs.

Convener: Alan Justice (Oak Ridge National Laboratory/Spallation Neutron Source)

-

13:30

µTCA 1h 30m CR03

CR03

R61

Speaker: Alan Justice (Oak Ridge National Laboratory/Spallation Neutron Source)

-

15:30

µTCA 1h 30m CR03

CR03

R61

Speaker: Alan Justice (Oak Ridge National Laboratory/Spallation Neutron Source)

-

13:30

-

15:00

→

15:30

Coffee and Tea 30m R112 Visitor Centre

R112 Visitor Centre

-

15:30

→

17:00

EPICS Council Meeting CR22 (R1)

CR22

R1

-

15:30

→

17:00

Frontend: OACTree Hamilton Room (R112 Visitor Centre)

Hamilton Room

R112 Visitor Centre

User facing applications

Convener: Mr Walter Van Herck (ITER)

-

15:30

→

17:00

Hardware and Hardware Interfaces: Motion Control CR12 + CR13 (R68)

CR12 + CR13

R68

Hardware and hardware interfaces relevant to EPICS. Includes work on IOCs.

Convener: Torsten Bögershausen (ESS)

-

09:00

→

15:00

-

-

09:00

→

12:30

Backend or Services: CyberSecurity Administrators Hamilton Room (R112 Visitor Centre)

Hamilton Room

R112 Visitor Centre

Convener: George McIntyre (Level N)

-

09:00

CyberSecurity Administrators 1h 30m Hamilton Room

Hamilton Room

R112 Visitor Centre

Speaker: George McIntyre (Level N)

-

10:30

Coffee and Tea 30m

-

11:00

CyberSecurity Administrators 1h 30m Hamilton Room

Hamilton Room

R112 Visitor Centre

Speaker: George McIntyre (Level N)

-

09:00

-

09:00

→

12:30

Frontend: Phoebus Developers CR03 (R61)

CR03

R61

User facing applications

Convener: Kunal Shroff

-

09:00

-

10:30

Coffee and Tea 30m

-

11:00

Phoebus Developers 1h 30m CR03

CR03

R61

Speaker: Kunal Shroff

-

09:00

→

10:30

Hardware and Hardware Interfaces: Timing 2 CR16 + CR17 (R80)

CR16 + CR17

R80

Hardware and hardware interfaces relevant to EPICS. Includes work on IOCs.

-

10:30

→

11:00

Coffee and Tea 30m R112 Visitor Centre

R112 Visitor Centre

-

11:00

→

12:30

Hardware and Hardware Interfaces: OPC-UA CR16 + CR17 (R80)

CR16 + CR17

R80

Hardware and hardware interfaces relevant to EPICS. Includes work on IOCs.

Convener: Ralph Lange (ITER Organization)

-

12:30

→

13:30

Lunch 1h Diamond Atrium (R83 Diamond Atrium)

Diamond Atrium

R83 Diamond Atrium

-

13:30

→

15:00

Breakout and Interest Groups 1h 30m Pickavance Lecture Theatre/Visitor Center

Pickavance Lecture Theatre/Visitor Center

ISIS Neutron and Muon Source

Rutherford Appleton Laboratory Harwell Campus, Didcot Oxfordshire, OX11 0QX. UK

-

13:30

→

15:00

EPICS Council & EPICS Core Developers Joint Meeting 1h 30m Hamilton Room (R112 Visitor Centre)

Hamilton Room

R112 Visitor Centre

By invitation only

-

15:00

→

17:00

Backend or Services: Python Special Interest Group Hamilton Room (R112 Visitor Centre)

Hamilton Room

R112 Visitor Centre

Conveners: Ivan Finch (STFC), Michael Davidsaver (Osprey DCS), Tom Cobb (DLSLtd)

-

15:30

Python 1h 30m

Speaker: Ivan Finch (STFC)

-

15:30

-

09:00

→

12:30